Understanding Bits and Bytes

I often encounter misconceptions about the basic building blocks of digital information: bits and bytes. Let’s clear up these concepts to better understand how computers process data.

This knowledge is essential for anyone stepping into the field of technology.

What Are Bits?

A bit is the smallest unit of data in computing. It’s a binary digit that can hold one of two values: 0 or 1.

These values are often used to represent off or on in digital electronics. Think of a bit as a tiny switch that can either be in the off position (0) or the on position (1).

Here’s a simple table to illustrate a single bit’s possible states:

| Bit Value | State |
|---|---|
| 0 | Off |
| 1 | On |
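
To make the switch analogy concrete, here is a minimal Python sketch that turns a single bit on and off inside an integer. The helper names `set_bit`, `clear_bit`, and `is_bit_set` are hypothetical, chosen for illustration, and bit positions are assumed to count from 0 at the rightmost bit.

```python
# Hypothetical helpers; position 0 is assumed to be the rightmost bit.

def set_bit(value: int, position: int) -> int:
    """Switch the bit at `position` on (1)."""
    return value | (1 << position)


def clear_bit(value: int, position: int) -> int:
    """Switch the bit at `position` off (0)."""
    return value & ~(1 << position)


def is_bit_set(value: int, position: int) -> bool:
    """Report whether the bit at `position` is on."""
    return ((value >> position) & 1) == 1


switch = 0                             # the switch starts off
switch = set_bit(switch, 0)            # flip it on
print(switch, is_bit_set(switch, 0))   # 1 True
switch = clear_bit(switch, 0)          # flip it off again
print(switch, is_bit_set(switch, 0))   # 0 False
```

The same three operations work on any bit of a larger number, which is how programs commonly pack several on/off flags into one value.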

How Bits Combine into Bytes

When bits combine, they create a more meaningful unit of data called a byte.

One byte consists of 8 bits.

With 8 bits, or one byte, you can represent 256 different values (2^8 = 256), which as unsigned numbers run from 0 to 255. The byte is also the smallest addressable unit of memory in many computer architectures.
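
The 2^8 = 256 figure follows from each added bit doubling the number of possible patterns. The sketch below checks this in Python; `count_values` is a hypothetical helper name, and the 3-bit enumeration is just a small enough case to print in full.

```python
from itertools import product


def count_values(num_bits: int) -> int:
    """Number of distinct patterns that `num_bits` bits can hold."""
    return 2 ** num_bits


print(count_values(1))  # 2
print(count_values(8))  # 256

# List every pattern of 3 bits to see the doubling in action.
patterns = ["".join(bits) for bits in product("01", repeat=3)]
print(patterns)       # ['000', '001', '010', '011', '100', '101', '110', '111']
print(len(patterns))  # 8
```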

To better understand this, let’s look at how bits combine to form a byte:

| Byte (8 bits) | Binary Value | Decimal Equivalent |
|---|---|---|
| 00000000 | 0 | 0 |
| 00000001 | 1 | 1 |
| 00000010 | 10 | 2 |
| 00000011 | 11 | 3 |
| 00000100 | 100 | 4 |
| 00000101 | 101 | 5 |
| 00000110 | 110 | 6 |
| 00000111 | 111 | 7 |
| 00001000 | 1000 | 8 |
| 00001001 | 1001 | 9 |
| 00001010 | 1010 | 10 |
| 00001011 | 1011 | 11 |
| 00001100 | 1100 | 12 |
| 00001101 | 1101 | 13 |
| 00001110 | 1110 | 14 |
| 00001111 | 1111 | 15 |
| 00010000 | 10000 | 16 |
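
The decimal column in the table above can be reproduced by adding up the weight of every bit that is on: the rightmost bit is worth 1, the next 2, then 4, and so on up to 128. Here is a minimal Python sketch of that calculation; `byte_to_decimal` is a hypothetical name, and the input is assumed to be a string of exactly eight `0`/`1` characters.

```python
def byte_to_decimal(bits: str) -> int:
    """Convert an 8-character string of 0s and 1s to its decimal value."""
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 8 characters, each 0 or 1")
    total = 0
    # Walk from the rightmost bit (weight 2**0) to the leftmost (weight 2**7).
    for position, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** position
    return total


print(byte_to_decimal("00000101"))  # 5   (4 + 1)
print(byte_to_decimal("00010000"))  # 16
print(byte_to_decimal("11111111"))  # 255

# Python's built-ins do the same conversion in both directions.
print(int("00010000", 2))   # 16
print(format(16, "08b"))    # 00010000
```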

The Significance of Bytes in Computing

A byte consists of eight bits, each of which can be either a 0 or a 1. Combined in groups of eight, these bits allow for 256 different combinations (from 00000000 to 11111111).

Bytes are fundamental to computing because they are the standard chunk of data used by many computer systems to represent a character such as a letter, number, or another symbol.

In modern computing, multiple bytes may be used to represent more complex information, such as an emoji or a character from a non-Latin script.

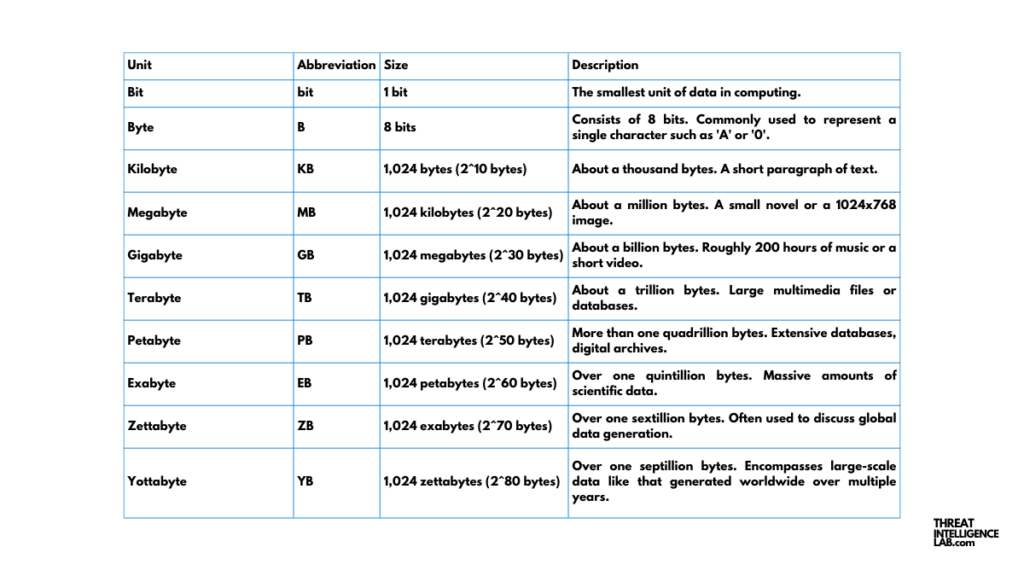
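
To see how many bytes different characters take, the sketch below encodes a few of them in Python. It assumes the UTF-8 encoding, which the article does not name but which is one widely used way of mapping characters to bytes; `utf8_bytes` is a hypothetical helper name.

```python
def utf8_bytes(char: str) -> bytes:
    """Return the bytes that represent `char` under UTF-8 (an assumed encoding)."""
    return char.encode("utf-8")


# A Latin letter, an accented letter, the euro sign, and an emoji.
for char in ["A", "\u00e9", "\u20ac", "\U0001F600"]:
    encoded = utf8_bytes(char)
    print(char, len(encoded), encoded.hex(" "))

# A 1 41
# é 2 c3 a9
# € 3 e2 82 ac
# 😀 4 f0 9f 98 80
```

Under this encoding a plain Latin letter fits in a single byte, while the emoji needs four.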

From Bytes to Larger Units: Kilobytes, Megabytes, and Beyond

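As we handle larger amounts of data, we scale up from bytes to kilobytes, megabytes, gigabytes, and so forth. In the decimal (SI) convention each step is a factor of 1,000: a kilobyte is 1,000 bytes, a megabyte is 1,000,000 bytes, and a gigabyte is 1,000,000,000 bytes. Some operating systems and memory specifications use factors of 1,024 instead.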
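
As a rough illustration of scaling between these units, the Python sketch below turns a raw byte count into a readable size. The function name `format_size` and its `binary` flag are hypothetical. The sketch assumes two common conventions that the article does not spell out: decimal units step by 1,000 (kB, MB, GB), and binary units step by 1,024 (KiB, MiB, GiB).

```python
DECIMAL_UNITS = ["B", "kB", "MB", "GB", "TB"]   # steps of 1,000
BINARY_UNITS = ["B", "KiB", "MiB", "GiB", "TiB"]  # steps of 1,024


def format_size(num_bytes: int, binary: bool = False) -> str:
    """Format a byte count using decimal (default) or binary units."""
    step = 1024 if binary else 1000
    units = BINARY_UNITS if binary else DECIMAL_UNITS
    size = float(num_bytes)
    # Divide by the step until the value fits under the next unit.
    for unit in units[:-1]:
        if size < step:
            return f"{size:.2f} {unit}"
        size /= step
    return f"{size:.2f} {units[-1]}"


print(format_size(1_500))                   # 1.50 kB
print(format_size(1_048_576, binary=True))  # 1.00 MiB
print(format_size(5_000_000_000))           # 5.00 GB
```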

Bytes Build the Digital World

In conclusion, bits and bytes are the alphabet of the computer world. Knowing how they work together to form complex data structures helps us comprehend and appreciate the complexity and beauty of modern computing. For anyone aspiring to master digital technology, grasping these concepts is a fundamental step.

I recommend that you experiment with simple coding exercises to manipulate bits and bytes. Practical application is the best way to deepen your understanding of digital data.

Whether you are coding a simple application or trying to break down how a particular piece of software works, your knowledge of bits and bytes will be invaluable.